"All Models are Wrong" is not a Good Excuse for Having Wrong Models

A big and embarrassing challenge to DSGE models is Alex Tabarrok’s latest post at Marginal Revolution. Tabarrok comments on a paper that finds two problems with new-Keynesian DSGE modeling. One is that this model/technique cannot predict real-world data very well. Put differently, when we ask DSGE not to forecast but to reproduce real-world data, DSGE is not very good at it. The other problem is that if the data is randomly relabeled (say you swap consumption for output), DSGE forecasts may be better than those obtained with the data labeled correctly. Embarrassing, Tabarrok concludes.

A defense of DSGE I encountered builds on the aphorism that “all models are wrong, but some are useful.” This aphorism is attributed to statistician George Box. His reference is to statistical models, but other disciplines, such as economics, have embraced it. This post is more about this line of defense than about the challenge raised to DSGE.

I find this phrase misleading, at least as I typically see it used in economics. The issue is that, despite the aphorism’s catchiness, the word “wrong” combines different types of wrongness. A model can be wrong in different ways, and I don’t think all of them are acceptable.

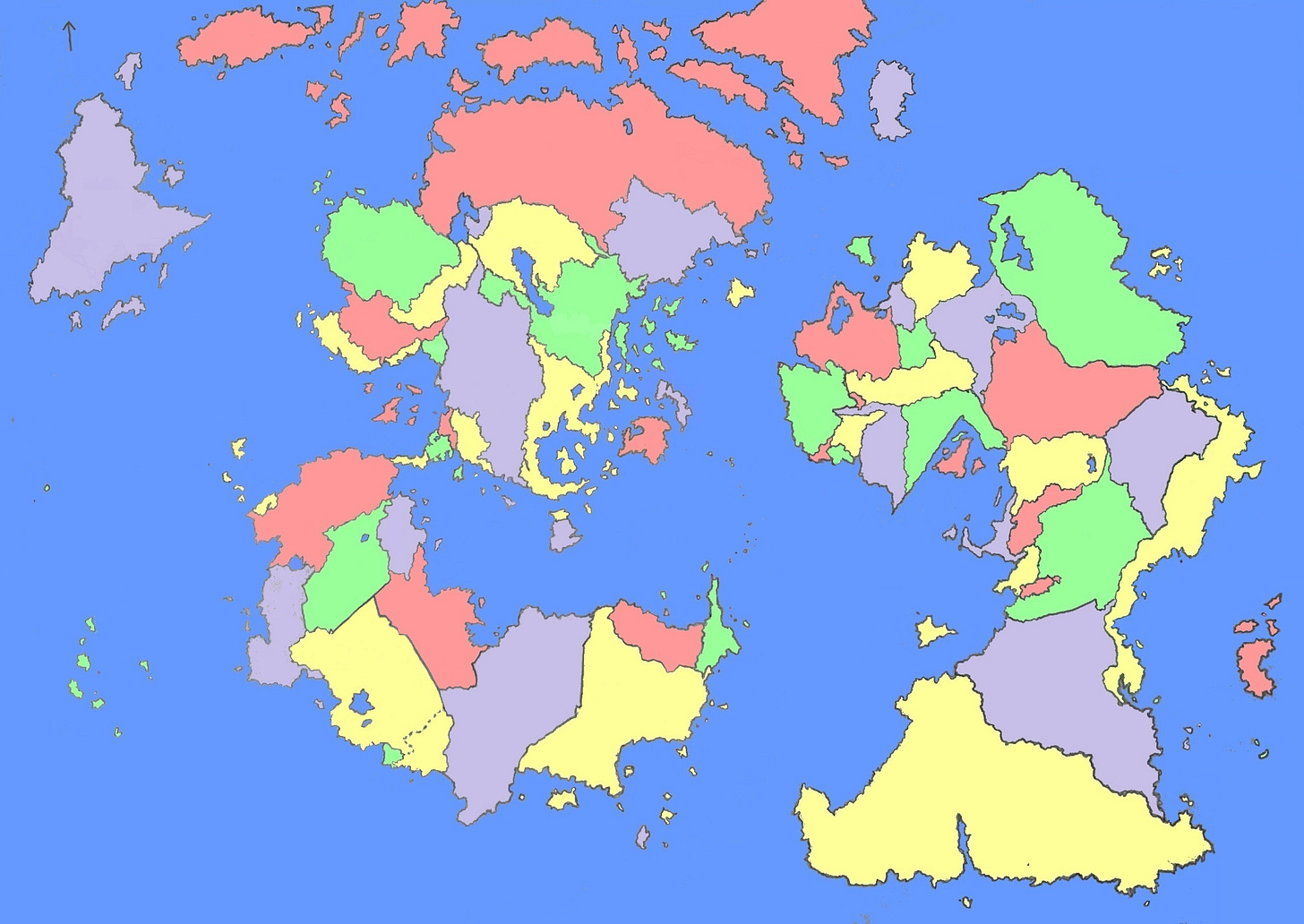

Think of a world map as a model of planet Earth and call this map “model 1.” This model must make several simplifying assumptions to fit the real world into a small map we can use. The model is a simplification yet reflects the real world. We can “see” how the world is captured in this model, even if it is incomplete because of its enormous simplifications. It would be trivial to object that a model like this map is wrong. A map like model 1 may or may not be useful for different purposes (identifying political borders, geographical features, navigation, and so on). In this sense, all maps are wrong, but some are still useful.

Now consider model 2, which is also a map. But this map, instead of simplifying the real world, uses assumptions that transform it into something else. The map has no counterpart in reality even if, by accident, it allows you to navigate from point A to point B (prediction or forecast), though maybe not very precisely.

We can say that both maps are “wrong,” yet it is clear that they are wrong in different ways. One model is a realistic simplification of the world, and the other is not. One model explains the real world. The other explains a fictitious world. One model is science, and the other is science fiction. Defending the fictitiousness of model 2 on the basis that model 1 is also “wrong” is a semantic trick that misses the challenge.

There is an important distinction between assumptions that simplify a problem and assumptions that change the problem. It is odd to defend assumptions that change the problem’s nature on the grounds that simplifying assumptions are needed.

This distinction between simplifying and changing a problem matters if we think the role of science is to explain the real world and that predictive power comes as a byproduct of said explanation. Instead, suppose we think that the purpose of science is only prediction. In that case, the realism of our explanations has no value. An instrumentalist of this type would be indifferent between a fortune teller and a mathematically sophisticated model with similar predictive power.

Maybe the discipline has two types of scientists, those concerned with understanding the real world as it is and those interested in predictive power. The difference is not mere semantics. A realistic model can predict. But it does not follow from a model’s ability to predict that the model is a good representation of reality. Prediction does not equal understanding. If you want, you can predict every day’s sunrise assuming Earth is the center of the universe. If we think that science should talk about the real world, then stating that “all models are wrong” is a poor excuse to have wrong (unrealistic) models.